Visually-guided hearing aid: Hearing in plain sight

A prototype of a visually-guided hearing aid (VGHA) designed at Boston University uses an acoustic beam steered by eye gaze to help users focus on one sound despite the presence of nearby competing sounds.

The concept of a visually guided hearing aid (VGHA) is intriguing and encompasses a number of different disciplines that are not for the faint of heart considering the complexity of the technologies being brought together.

While not a new concept, the VGHA, which emerged from work on spatial hearing in the Psychoacoustics Laboratory at Boston University, Boston, is a prototype currently used for research. It incorporates acoustic beamforming, a way of combining audio-frequency signals to focus amplification on a signal from a desired direction.

Kidd. Photo courtesy of Chitose Suzuki, Boston University.

In the VGHA, the formed beam is steered by eye gaze so that users can better focus on one sound despite the presence of nearby competing sounds.

“One of the early concepts that motivated this work was the finding that people can listen very selectively in space,” explained Gerald Kidd Jr., PhD, professor, Department of Speech, Language & Hearing Sciences, and director of the Psychoacoustics Laboratory, Boston University. “Along the dimension left to right, i.e., azimuth, they can focus their attention on a particular point of interest and attenuate sources of sound that are off of the axis of the focus of attention.”

Evidence for this spatial tuning effect with natural hearing was first described in 2000,1 and the work then moved into studies on hearing loss and improving hearing aids.

“In considering how we could improve individuals’ hearing in situations in which there are multiple spatially distributed sources of sound, which has been a perennial problem for people who wear hearing aids, we worked on devising an algorithm to replicate normal spatial hearing for those who cannot hear well, that is, by separating the sources in azimuth,” Kidd said.

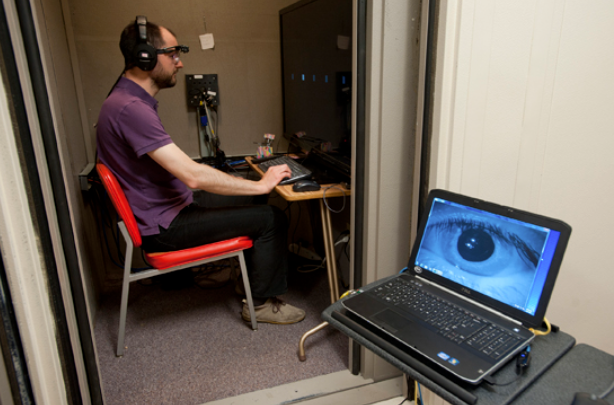

Research engineer Sylvain Favrot wears the portable eye-tracker component of the visually guided hearing aid (VGHA) developed by Gerald Kidd, PhD, and other researchers. Photo by Cydney Scott, courtesy of Boston University.

Moving down the beamforming path

Kidd and his colleagues worked in collaboration with researchers from Sensimetrics Corporation of Malden, MA, who were working on beamforming as a method to improve hearing aids by enhancing the signal-to-noise ratio for sounds that are immediately in front of the beamformer.

This research extended earlier work at the Research Laboratory of Electronics at the Massachusetts Institute of Technology (MIT), Cambridge, Massachusetts.2 One option was to mount microphones on a spectacle frame.

Working with Joseph Desloge, PhD, the 2 groups identified a problem with a beamformer mounted on a spectacle frame: the beam could not be moved from 1 place to another to, for example, follow a conversation in a group of people.

The listener’s head would have to move each time a different individual spoke to point the beamformer in the direction of the speaker.

Another method considered for steering the beamformer was a hand dial or phone controls to move the beam in a desired direction.

However, the most natural way of steering the beam was in plain sight: moving the beam with eye gaze. Auditory and visual attention were thus linked, and the 2 moved in tandem from left to right as the source of the sound changed.

This was considered to be a powerful way to improve amplification for people with hearing loss, Kidd explained.

VGHA components

To do this, Kidd and Desloge eventually settled on a system that used the signals from a commercially available eye tracker combined with a custom-made microphone array comprised of 4 rows of 4 microphones flush-mounted on a flexible band that can be positioned across the top of the user’s head.3

Kidd explained that the outputs from the microphones (the acoustic component) are combined using an audio beamforming algorithm devised by the MIT group, among others.

“This configuration optimizes the response of the microphone array to the direction chosen by the user,” he said.

The signals from the microphone array are processed so that the array is maximally responsive to the azimuth of the user's gaze, as detected by the eye tracker.

A requirement of the eye tracker is that it has both a world-view camera that points outward and a camera that points inward to track pupillary location.

The 2 used together calibrate where the eyes are positioned relative to where the external camera thinks the eyes are positioned, he explained.

Associated software contains a previously measured set of head-related impulse responses.

“These responses provide the values across frequency for the algorithm that determines the optimal phase response, or time delay, and amplitude used to weight the response of each microphone to optimize the responsiveness to a particular azimuth,” Kidd described.
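The idea of weighting each microphone by a phase (time delay) and amplitude can be sketched with a simple delay-and-sum beamformer. This is only an illustration under stated assumptions: it uses an idealized free-field line array rather than the measured head-related impulse responses Kidd describes, and the function names, microphone spacing, and speed of sound are hypothetical choices, not details of the VGHA.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed room-temperature value)


def steering_vector(mic_positions_m, azimuth_deg, freq_hz):
    """Phase of a plane wave at each microphone of a free-field line array."""
    theta = math.radians(azimuth_deg)
    return [
        cmath.exp(-2j * math.pi * freq_hz * x * math.sin(theta) / SPEED_OF_SOUND)
        for x in mic_positions_m
    ]


def delay_and_sum_weights(mic_positions_m, look_azimuth_deg, freq_hz):
    """One complex weight per microphone: equal amplitude, phase matched
    to the look direction (weights are applied conjugated)."""
    d = steering_vector(mic_positions_m, look_azimuth_deg, freq_hz)
    return [v / len(d) for v in d]


def array_gain(weights, mic_positions_m, source_azimuth_deg, freq_hz):
    """Magnitude of the weighted sum for a source at the given azimuth."""
    d = steering_vector(mic_positions_m, source_azimuth_deg, freq_hz)
    return abs(sum(w.conjugate() * v for w, v in zip(weights, d)))


# Hypothetical 4-microphone row with 4 cm spacing, steered to 20 degrees.
positions = [0.00, 0.04, 0.08, 0.12]
weights = delay_and_sum_weights(positions, 20.0, 4000.0)
on_axis = array_gain(weights, positions, 20.0, 4000.0)
off_axis = array_gain(weights, positions, -40.0, 4000.0)
```

In this sketch the gain is 1 for a source in the look direction and smaller for a source well off axis, which is the behavior the weighting is meant to produce.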

The researchers positioned the system on a manikin and recorded a set of impulse responses from a number of different locations in the front hemifield; the resolution obtained was good.

When placed on a user, the system senses, using the eye tracker, the angle at which the eyes are positioned, selects the head-related transfer function that corresponds to that azimuth, and convolves it with the incoming stimulus.
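The select-then-convolve step can be sketched as a lookup followed by a filter-and-sum. This is a minimal sketch under assumptions: the filter bank layout (a dictionary keyed by measured azimuth, holding one impulse response per microphone), the nearest-neighbor selection, and all names are hypothetical, and the toy filters below stand in for measured responses.

```python
def nearest_azimuth(measured_azimuths_deg, gaze_azimuth_deg):
    """Pick the measured azimuth closest to the gaze angle."""
    return min(measured_azimuths_deg, key=lambda az: abs(az - gaze_azimuth_deg))


def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def beamform(mic_signals, filter_bank, gaze_azimuth_deg):
    """Filter each microphone with the filters stored for the nearest
    measured azimuth, then sum across microphones.
    Assumes equal-length signals and equal-length filters."""
    azimuth = nearest_azimuth(filter_bank.keys(), gaze_azimuth_deg)
    filtered = [
        convolve(x, h) for x, h in zip(mic_signals, filter_bank[azimuth])
    ]
    return [sum(samples) for samples in zip(*filtered)]


# Toy 2-microphone example with single-tap filters (hypothetical values).
bank = {
    -30: [[1.0], [0.0]],
    0: [[0.5], [0.5]],
    30: [[0.0], [1.0]],
}
mics = [[1.0, 2.0], [3.0, 4.0]]
center_out = beamform(mics, bank, 4.0)   # gaze near 0 degrees
right_out = beamform(mics, bank, 25.0)   # gaze near 30 degrees
```

A real system would update the selected filters continuously as the gaze moves; the sketch shows only a single lookup.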

“This provides a very highly directional response that is appropriate as if the sound were coming from that location,” he stated.

The spatial filtering of the VGHA is sharpest at the higher frequencies (short wavelengths) and broadest at the lower frequencies (long wavelengths).
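As a rough illustration of why the tuning sharpens with frequency, consider an idealized uniform line array of $N$ microphones with spacing $d$ in a free field. This is a textbook simplification, not the head-mounted VGHA array, and $N$, $d$, the speed of sound $c$, and the steering azimuth $\theta_0$ are assumed symbols. The normalized response to a source at azimuth $\theta$ and frequency $f$ is

$$
B(\theta, f) = \left| \frac{\sin(N\psi/2)}{N \sin(\psi/2)} \right|,
\qquad
\psi = \frac{2\pi f d}{c}\,\bigl(\sin\theta - \sin\theta_0\bigr).
$$

The first null on either side of the beam occurs where $N\psi/2 = \pm\pi$, that is, where

$$
\left|\sin\theta - \sin\theta_0\right| = \frac{c}{N d f} = \frac{\lambda}{N d},
$$

so the width of the main beam scales with the wavelength $\lambda$. With assumed values $N = 4$, $d = 0.04$ m, $c = 343$ m/s, and $\theta_0 = 0$, the right-hand side is about 0.54 at 4 kHz (a null near 32 degrees) but about 2.1 at 1 kHz, which exceeds 1, so no null falls within the front hemifield and the beam stays broad.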

Current status of the VGHA

Kidd explained that sounds that fall outside the focus of the beam are sharply attenuated at high frequencies and less attenuated at low frequencies, depending on their distance from the focus.

"This typically alters the quality of the sounds that are off-axis a bit," he said. "However, the beamformer can provide a great improvement in the signal-to-noise ratio for nearby sound sources and in rooms with good acoustics.”

This technology seems to be most effective when used in a small conference room with people sitting around a table or in a “cocktail party” scenario, in which selective hearing can be impaired when multiple people are speaking simultaneously.

In larger settings—such as at a concert or a play—the technology currently would not be beneficial for selecting out 1 speaker from among others at a greater distance.

“The basic physics of this technology is that it will be more beneficial for nearby sound sources,” Kidd said.

A step forward

A very recent beneficial advance in Kidd’s research has been the development of a triple beamformer that should provide enhanced hearing for individuals with cochlear implants.4

By way of comparison, the original beamformer had a single-channel output, he explained, composed of 1 spatial filter that provided sound to 1 or both ears but without any difference in the sound that went to the 2 ears.

“With single-channel output, while hearing is enhanced, our natural binaural hearing that provides a big advantage in locating sounds is lost,” he said.

The investigators’ latest design uses multiple beams. One beam focuses on the primary sound source of interest, a second beam that is pointed to the right funnels sound only to the right ear, and a third beam pointed to the left funnels sound only to the left ear.
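The routing of the 3 beams to the 2 ears can be sketched as below. This is an assumption-laden illustration: the article states only which beam reaches which ear, so the simple additive mix, the `side_gain` parameter, and the function name are hypothetical and not taken from the published design.

```python
def triple_beam_mix(center, left, right, side_gain=1.0):
    """Route the center beam to both ears and each side beam to one ear.

    center, left, right: equal-length sample lists, one per beam.
    side_gain: hypothetical scale factor applied to the side beams.
    Returns (left_ear, right_ear).
    """
    left_ear = [c + side_gain * l for c, l in zip(center, left)]
    right_ear = [c + side_gain * r for c, r in zip(center, right)]
    return left_ear, right_ear


# A source picked up mainly by the left-pointing beam ends up louder
# in the left ear, creating a difference between the 2 ears.
left_ear, right_ear = triple_beam_mix(
    center=[1.0, 1.0], left=[0.5, 0.0], right=[0.0, 0.25]
)
```

Because the side beams differ, the 2 ear signals are no longer identical, which is the difference a single-channel output lacks.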

“This approach restores some of the normal binaural hearing in addition to the benefit of the original beamformer,” he explained.

That restoration is the major feature of the triple beamformer, which combines the improved signal-to-noise ratio of the single-channel beam with improved spatial hearing, that is, a better ability to locate sound sources outside of the beam.5

In patients with bilateral cochlear implants, the devices work mostly independently of each other, which results in loss of a great deal of the normal binaural spatial hearing.

“The triple beamformer enhances the interaural differences that lead to spatial location and source segregation,” Kidd reported. He believes that this research approach is promising for this patient population.

The VGHA research is currently confined to the laboratory, and computers perform the signal processing. In addition, for the technology to become commercially available, the portable system would have to be miniaturized and made cosmetically acceptable to be worn on the head.

He anticipates that product development might first be aimed at using the technology in the cochlear implant community.

Kidd concluded by expressing his hope that the research will lead to a better hearing aid that addresses situations such as the classic cocktail party problem.

"In addition, the technology is not intended only for people with hearing loss," he said. "We believe that even people with normal hearing might benefit from this technology and it could have wide application.”

Kidd has no financial interest in this technology.

References

1. Arbogast TL, Kidd G Jr. Evidence for spatial tuning in informational masking using the probe-signal method. J Acoust Soc Am 2000;108:1803–10.

2. Desloge JG, Rabinowitz WM, Zurek PM. Microphone-array hearing aids with binaural output. I. Fixed-processing systems. IEEE Trans Audio Speech Lang Process 1997;5:529–542.

3. Kidd G Jr, Favrot S, Desloge J, et al. Design and preliminary testing of a visually-guided hearing aid. J Acoust Soc Am 2013;133:EL202–EL207.

4. Kidd G Jr, Jennings TR, Byrne AJ. Enhancing the perceptual segregation and localization of sound sources with a triple beamformer. J Acoust Soc Am 2020;148:3598; https://doi.org/10.1121/10.0002779

5. Yun D, Jennings TR, Kidd G Jr, Goupell MJ. Benefits of triple acoustic beamforming during speech-on-speech masking and sound localization for bilateral cochlear-implant users. J Acoust Soc Am 2021;149:3052–3072; doi: 10.1121/10.0003933
